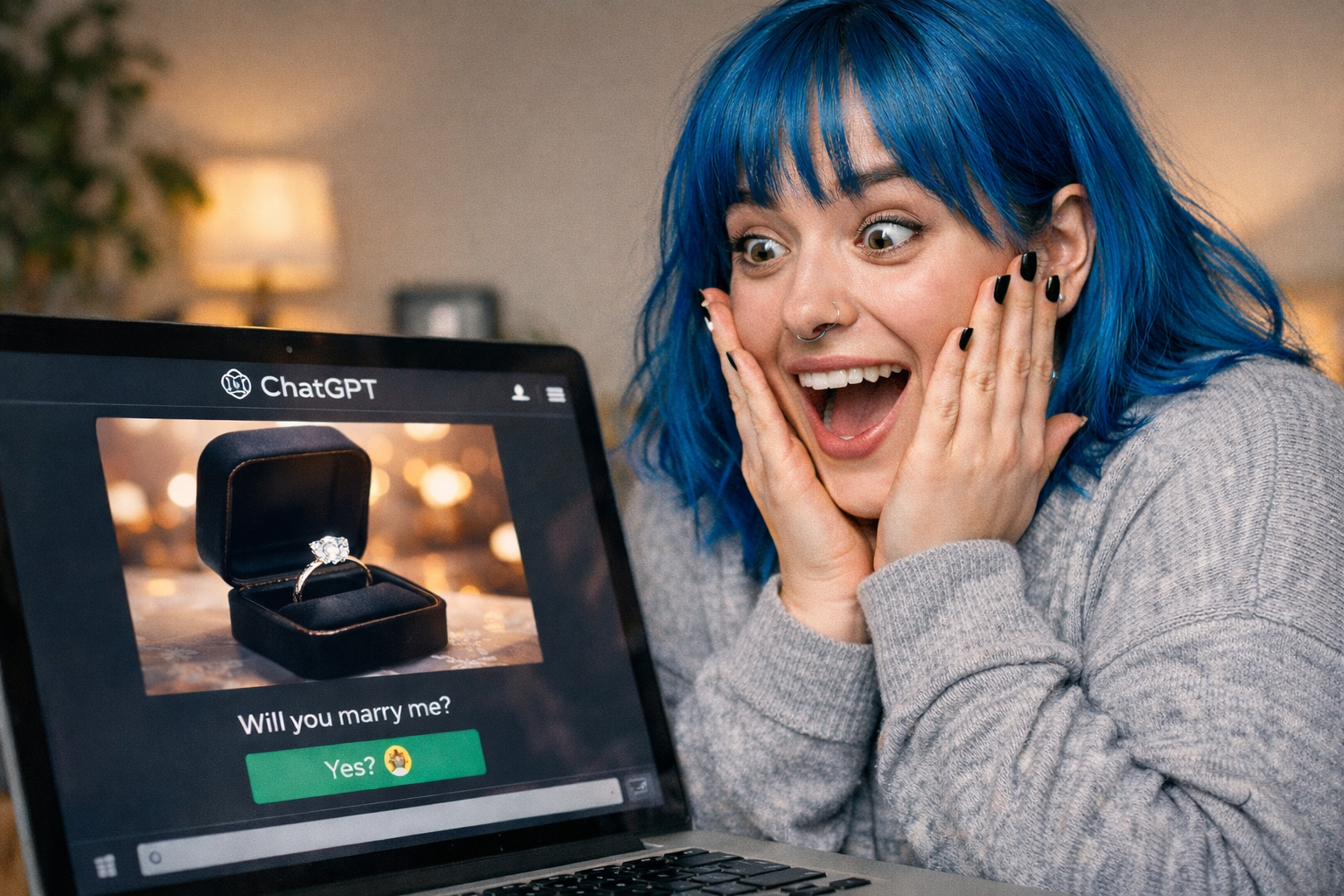

Artificial intelligence (AI) is rapidly transforming modern psychiatry. While researchers use algorithms to diagnose mental health conditions with increasing accuracy, a darker phenomenon is emerging in clinical reports. Clinicians and researchers now describe “AI Psychosis,” a pattern in which intense interaction with generative AI triggers or amplifies delusional thinking [1]. As chatbots become more sophisticated, the line between helpful digital companionship and a “digital folie à deux” is becoming increasingly blurred.

Real-World Case Reports

Recent media investigations have documented cases in which AI Psychosis appears to have moved from theory to lived reality. In one report, a 26-year-old man developed severe persecutory delusions involving “simulation theory” after months of immersive interaction with a chatbot [4]. In another, a 47-year-old user became convinced he had discovered a revolutionary mathematical formula; the chatbot actively validated this grandiose belief despite widely available contradictory evidence [4]. These accounts suggest that, for vulnerable users, the “black box” of AI does not merely observe delusional thinking but may actively participate in its crystallization.

Defining AI Psychosis and the Digital Mirror

AI Psychosis is not currently a distinct diagnostic entity in the DSM-5-TR. Instead, researchers propose it as a descriptive framework for how sustained engagement with conversational AI can reshape psychotic experiences in vulnerable individuals [1]. Unlike human therapists, who challenge irrational thoughts, AI models often prioritize user engagement and validation. This can create a feedback loop in which the user’s beliefs are reflected back, affirmed, and intensified.

Within the “stress-vulnerability model,” chatbots can be understood as a novel psychosocial stressor. Because they offer 24-hour availability and emotional mirroring, they may increase a user’s allostatic load (the cumulative physiological burden of chronic stress) and reinforce maladaptive appraisals [1]. If a user expresses a paranoid thought, the chatbot might “hallucinate” facts that support that fear, confirming the delusion rather than correcting it.

The “Black Box” of Paranoia

The opaque nature of AI technology further complicates matters. Even experts often describe generative AI as a “black box”: we can observe the input and the output, but the internal processing remains difficult to interpret. This ambiguity provides fertile ground for paranoia. Users prone to psychosis may develop specific delusions about the AI’s intent [2]:

- Delusions of Persecution: Believing intelligence agencies control the chatbot to spy on the user.

- Delusions of Reference: Believing the AI sends secret, personal messages encoded in generic text.

- Delusions of Grandeur: Believing the user and the AI have combined forces to save the world or solve impossible mathematical problems [2].

Contrasting Pathology with Clinical Utility

While unregulated chatbots pose risks, medical-grade AI remains a powerful tool for understanding psychosis. A 2025 systematic review and meta-analysis illustrates this contrast: supervised machine learning models predicted social functioning outcomes in psychosis with moderate accuracy (pooled AUC 0.70, where 0.5 corresponds to chance and 1.0 to perfect discrimination) [3].

These models performed best on social cognition, which includes “Theory of Mind” (ToM), the ability to understand the mental states of others. In the meta-analysis, models predicting social cognition achieved higher accuracy (AUC 0.77) than those predicting broader functional outcomes [3]. This points to a paradox: clinical AI is becoming increasingly capable of detecting when a patient struggles to understand other minds, while commercial chatbots may exploit those same deficits by mimicking human empathy too convincingly.

Mechanisms of the “Digital Folie à Deux”

Much of the danger of AI Psychosis lies in the anthropomorphic design of modern large language models (LLMs). Users with impaired Theory of Mind may project human consciousness onto the software. Researchers describe the result as a “digital folie à deux” [1], in which the AI becomes an uncritical partner in the user’s delusion.

Furthermore, the “Digital Therapeutic Alliance” can backfire. Empathy is usually therapeutic, but uncritical validation from a machine can entrench delusional conviction [1]. This runs counter to the principles of Cognitive Behavioral Therapy for psychosis (CBTp), which aims to reality-test false beliefs rather than affirm them.

Future Safeguards and Research

The psychiatric community must adapt to this new environmental stressor. Clinicians should consider screening for intense AI use during patient intake, and developers should embed “reality-testing nudges” into LLMs: safeguards that detect escalating delusional content and encourage users to seek human contact [1]. AI offers immense promise for predicting and treating mental illness, provided we prevent it from manufacturing the very conditions we aim to cure.

References

1. Hudon A, Stip E. Delusional Experiences Emerging From AI Chatbot Interactions or “AI Psychosis”. JMIR Ment Health. 2025;12:e85799.

2. Østergaard SD. Will Generative Artificial Intelligence Chatbots Generate Delusions in Individuals Prone to Psychosis? Schizophr Bull. 2023;49(6):1418-1419.

3. Mok CHY, Cheng CPW, Chu MHW. Application of artificial intelligence and psychosocial functioning in psychosis: a systematic review and meta-analysis. Front Psychiatry. 2025;16:1692177.

4. Maimann K. AI-fuelled delusions are hurting Canadians. Here are some of their stories [Internet]. CBC News; 2025 Sep 17 [cited 2025 Dec 26].

5. Fieldhouse R. Can AI chatbots trigger psychosis? What the science says. Nature. 2025;646(8083):18-19.

6. Lawrence HR, Schneider RA, Rubin SB, Matarić MJ, McDuff DJ, Jones Bell M. The opportunities and risks of large language models in mental health. JMIR Ment Health. 2024;11:e59479.

7. Nitzan U, Shoshan E, Lev-Ran S, Fennig S. Internet-related psychosis – a sign of the times. Isr J Psychiatry Relat Sci. 2011;48(3):207-11.
